The study was carried out by researchers from The University of Electro-Communications in Tokyo and the University of Michigan.

The vulnerability has been detailed in a new paper titled Light Commands: Laser-Based Audio Injection Attacks on Voice-Controllable Systems.

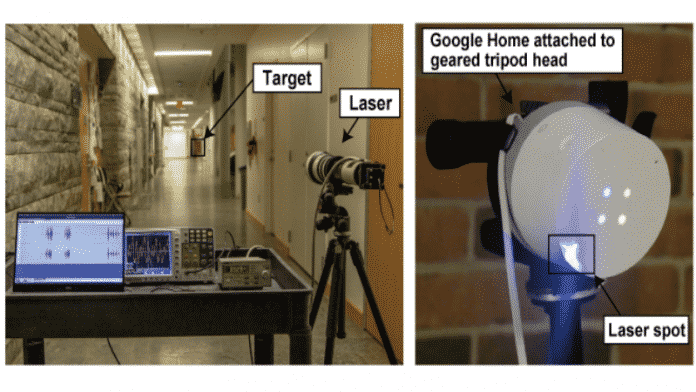

The flaw, dubbed Light Commands, uses lasers to send fake commands to smart speakers and other voice-controllable devices.

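Microphones convert sound into electrical signals. The researchers found that microphones also react to light aimed directly at them, so by modulating the intensity of a laser beam, an attacker can make a microphone produce the same signal as a spoken command.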

Google, which is aware of the research, confirmed that it is closely reviewing the paper.

While the vulnerability certainly sounds alarming, it is not an easy exploit to pull off.

source: www.techworm.net